Fractured Star Projections

Human Creator Ledger

Pre-AI concept

Annotated documentation of pre-AI concept. Unrelated notes are blacked out. Notes that are related but potentially embarrassing, as they were written down without thought of publication, were left in.

This project arose out of an interest in playing with what other people have asked AI to do. On Midjourney's Discord channel, there is an archive of everything publicly generated by users, alongside the prompts that they wrote and used. I had a sociological and cultural interest in this, but I was also curious to try working within the creative constraint of not asking generative AI to make things to my specifications, relying instead on what other people had asked of it. Having played with AI generation myself, I know that prompts range from the purposeful and utilitarian to the spontaneous, personal, and revealing. In this way, it is kind of like looking at someone's web searches, but with different imaginative boundaries.

There are other reasons why using the results of other people's generative AI prompts was appealing to me. One is that, compared to writing my own prompts to generate new images, it has almost no additional environmental impact. At this time, the pursuit of "found" AI has not created incentives for the creation of more AI-generated images.

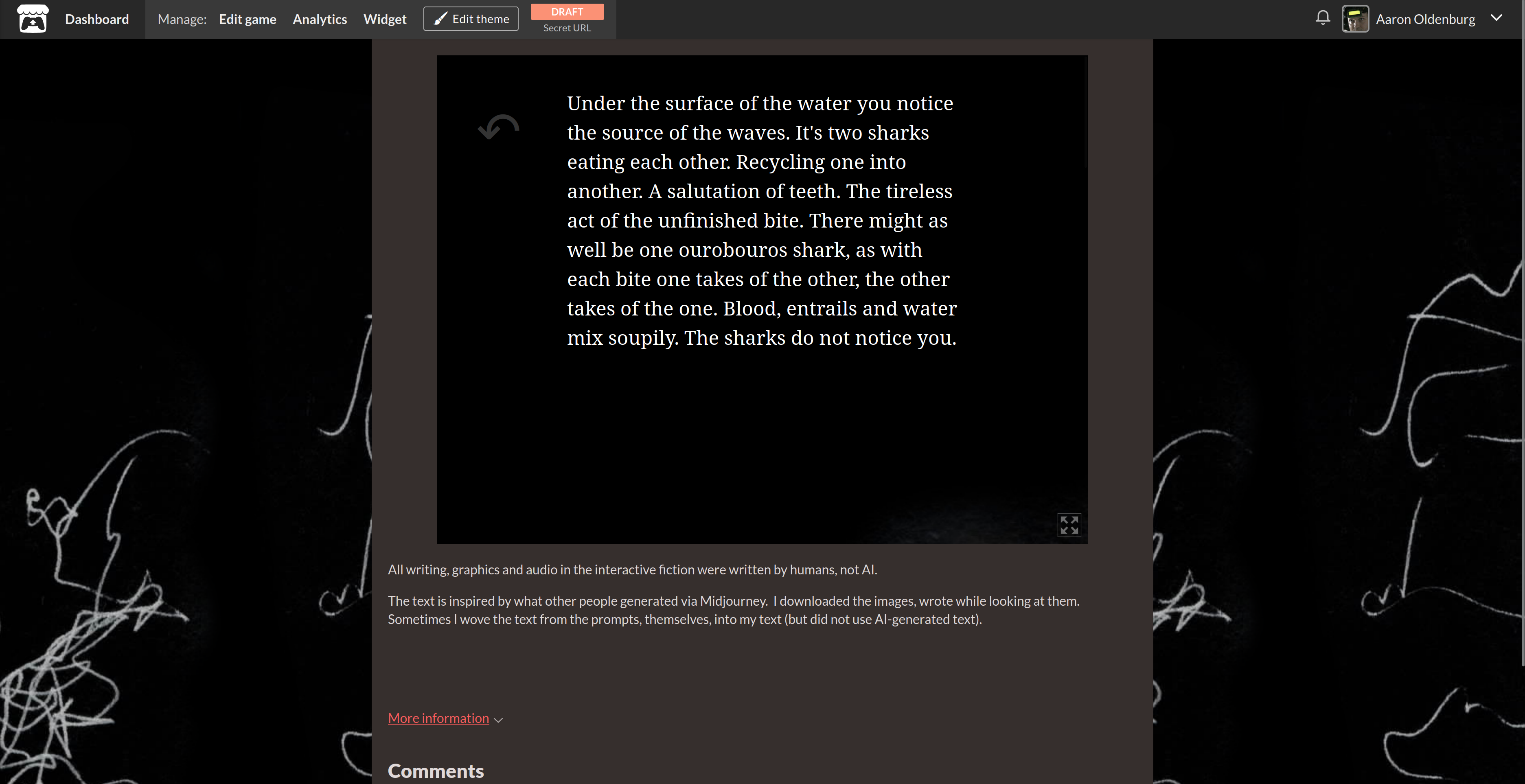

There is still the issue of AI plagiarizing others' work, which this process does not, on its own, solve. However, in this project, I have chosen not to show the generated works themselves, but to use those images as writing, drawing, and audio prompts for an interactive narrative. Although players will have access to an archive of these images for reference, the images themselves are not part of the final work.

In a nod to the plagiaristic nature of AI, I have included some stolen text of my own, though: the text of the prompts, which I occasionally weave into my narrative (the title itself was taken from a prompt). I don't think I benefit unduly from this theft, as the writing in prompts is not great. I considered visually highlighting the borrowed text, but found it distracting. I do make all of the prompts available in a text file separate from the work.

I chose images based on intuitive pull. Most were dumb. Many were obsessive, with the user making double-digit attempts to get the perfect image through variations on a prompt. With those, I often downloaded the entire series of images. I was not looking for anything exciting or polished. Sometimes the pull for me was wondering what drove the person toward that particular prompt. Sometimes it was the absurd amount of specificity. Often, the generated work was not aesthetically interesting, but the prompt itself, and the obsessive iterations, were.

I began world-building off of the descriptions of the work. I enjoyed playing with the image variation and incorporating that slipperiness into my narrative. The narrative is absurd and free-associative.

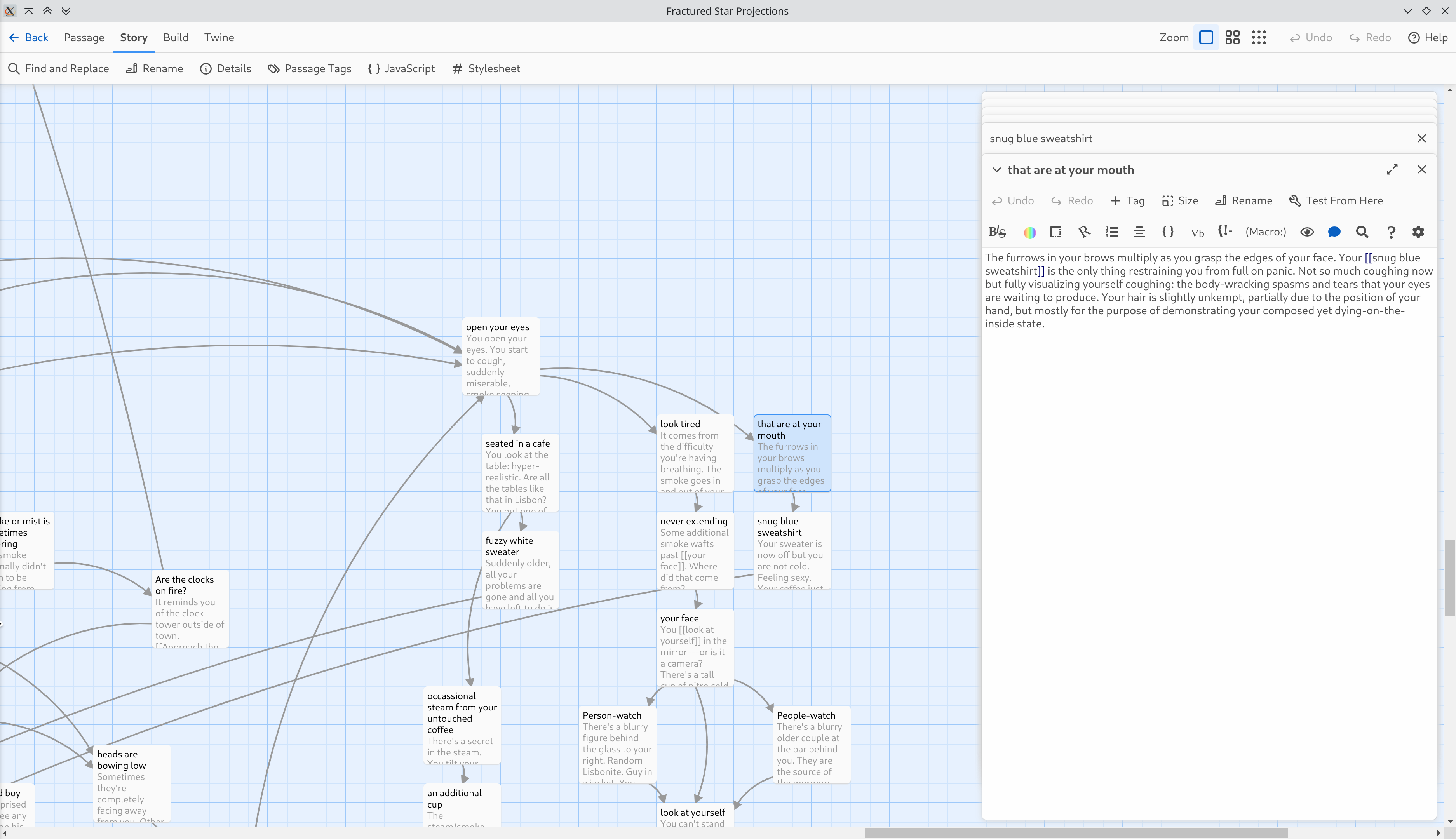

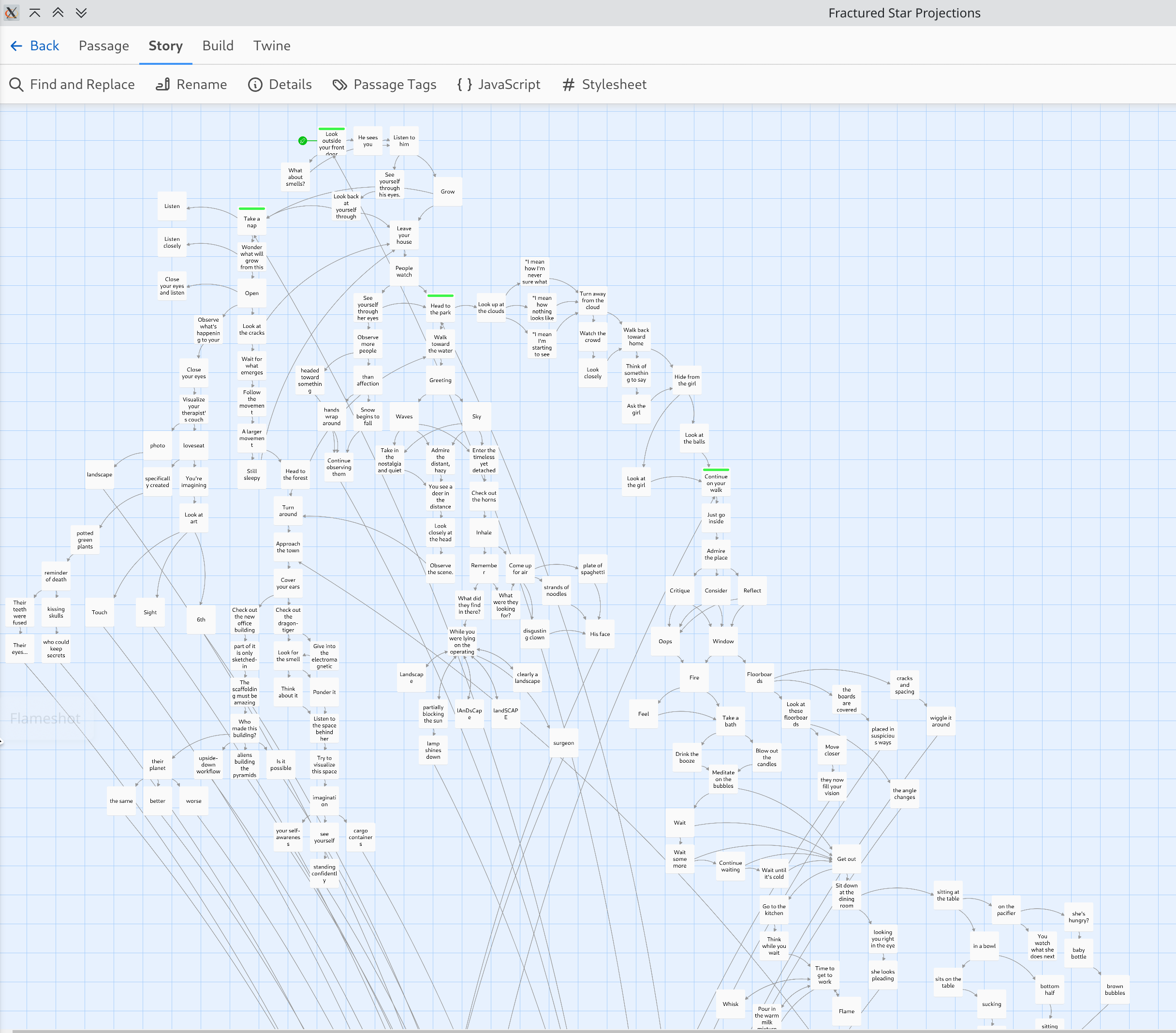

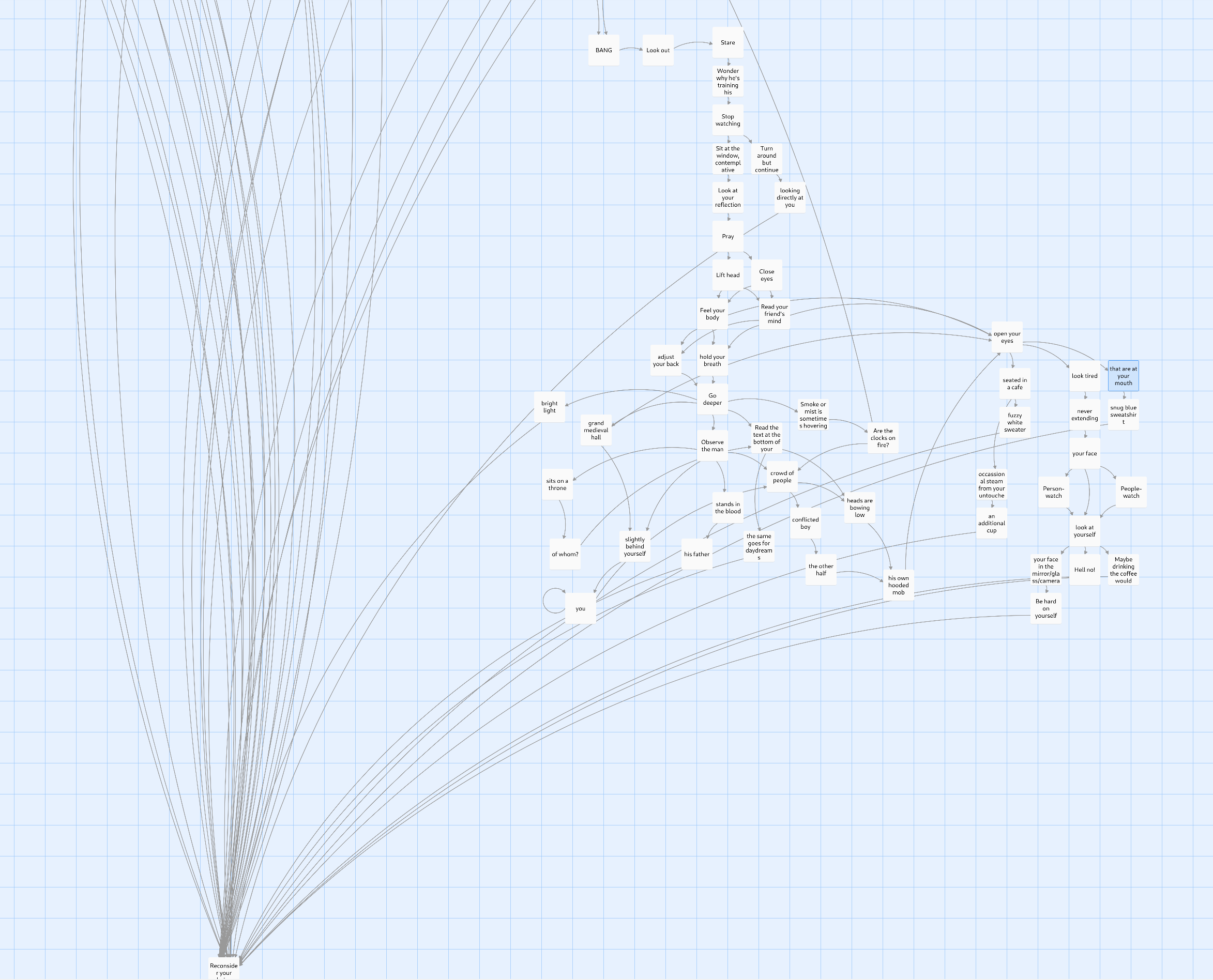

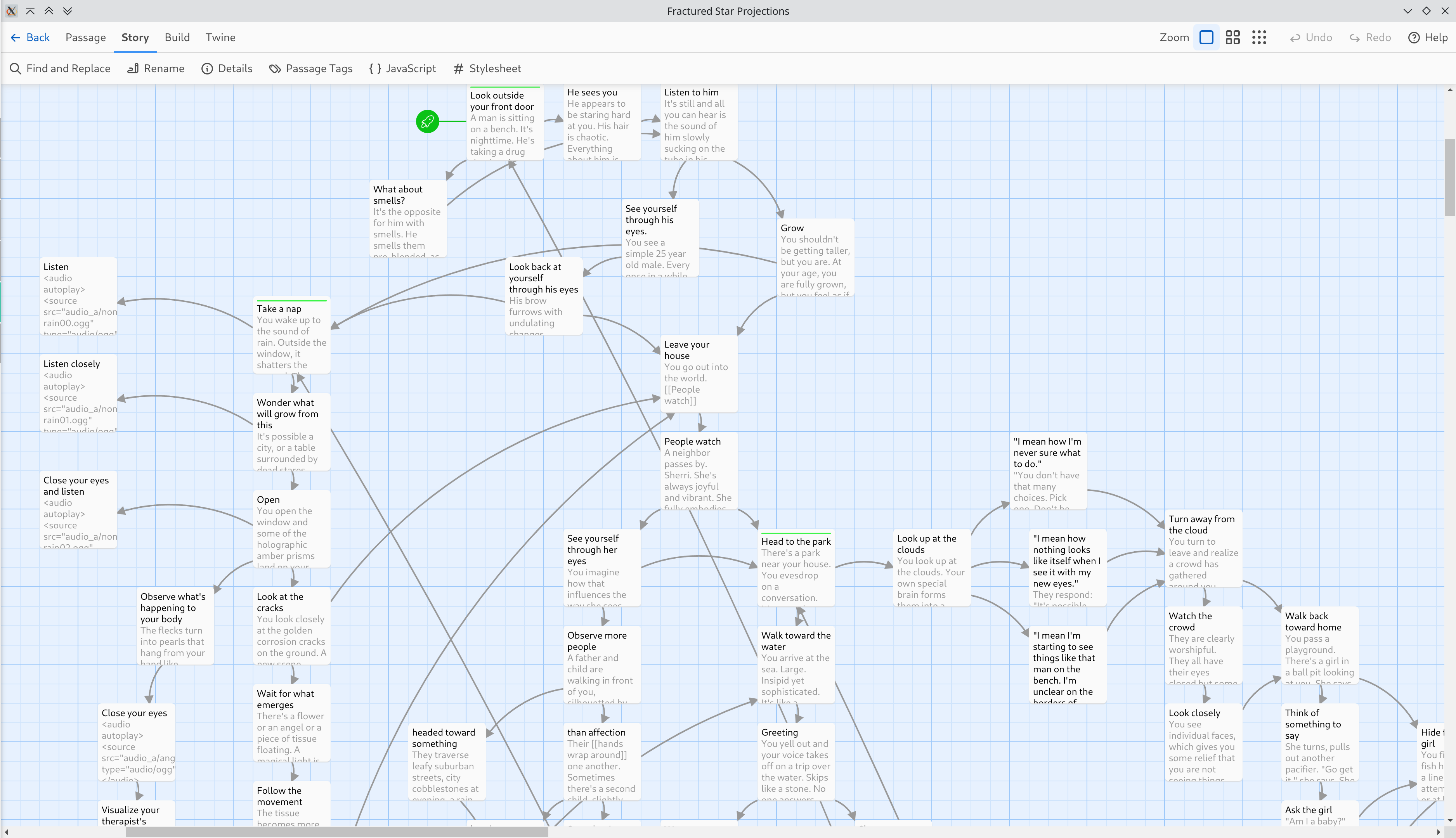

This was my first Twine project. Twine is software that allows one to quickly build interactive narratives and publish them as HTML. It gives one a nice bird's-eye view of what quickly becomes a sprawling flowchart. This stretched my creative writing process.

I originally began with the intention of pairing my text with the images, with the goal of undermining and reinterpreting them. However, Twine is not an ideal engine for displaying images, and I found that I preferred reading the text without them. Without the images, the text removes us from what the prompt generated, and we are required to create our own images in our heads (a process that should, of course, be familiar to readers).

I feel like I am collaborating with the reader to organically recreate these images that have been blandly and procedurally created via AI. I also like how this process draws attention to how the human brain creates images, and what similarities, if any, exist between our image creation and that of a digital neural network.

I did include some images in the final product, but to keep the focus on this process, they are drawings I made of the AI-generated images. To make them, I used the old drawing-class technique where one looks only at the object being drawn and never at the paper on which one is drawing. What one ends up with are loose scribbles, but also a sense of really seeing the contours of what one is looking at. Drawing this way put my attention on how my brain takes in the information from the image and translates it into arm movement. The vagueness of the drawings also complements the narrative without hijacking it the way the original generated images would have.

I also responded to some images with sound effects, music, and narration that I made. These appear on a handful of nodes.

Some of the narrative themes also touch on how our brains generate reality. The story does not really end. Most of the threads stop with a choice to jump to an earlier part of the narrative and explore different paths.

AI Co-Creator Profile

I used Midjourney, software I had experience using in the past. The most important aspect of the software for this project was the public archive of past prompts and outputs.

This project is different from most AI collaborations, as usually the artist is also the one who writes the prompts. There is an enjoyment inherent in that space between what one writes and what the system outputs. In this project, I see only the end results of that exchange, and the order is reversed, often surprisingly: I see the image first, and only afterward get a glimpse of the human behind it by reading the prompt. What they wanted versus what they received is often made painfully clear through the number of iterations they made on the prompts.

I wove these iterations into the narrative. Often the character will have unexplained changes. Some of these are mundane, such as the color and style of the character's sweater changing in each description. Others play with the fact that AI doesn't understand the physical reality of the scenes being depicted, and so the world I create often abides by the rules of the AI-generated images rather than those of physical reality: disappearing hands, a floor that drips onto you after a bath.

There is a danger here of falling into cliché surrealism. But I think the process gets more deeply at something the surrealists were engaged in: attempting to find and inhabit an underlying reality. Here, that reality is metaphorically expressed through the opacity of generative AI's code and training data.

Although the images themselves are not in the final piece, the process that generates them inhabits my description and narrative. Sometimes I describe what I see in the images fairly faithfully, and other times I use part of an image simply as a launching point for an original narrative vignette.

All of this is in service to the old collaborative process between author and reader, where we join together to generate ephemeral images in our biological neural networks. Using AI helped me shine a light on this process.

Synthesis Process

I enjoyed the flow of going in and out of descriptive writing based on the picture I was viewing, following it as loosely or as closely as I saw fit.

The process illuminated the contrast between the AI's limitations, seen in the back-and-forth between prompter and generator, and what felt like my own lack of any imaginative limitations. It was easy to write narrative that was constrained by the images I happened to find. This did make it hard to tidily wrap up the eventually sprawling narrative, let alone guide it toward any conventional plot.

I found this to be an inspiring creative exercise and have considered creating additional works using found AI, or organizing a game jam with this theme.